An Irresponsible Introduction to K8S

Kubernetes (K8s for short) is a mature, open-source system for managing containerized workloads and services. Google released it publicly in 2014.

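K8S has a few nice advantages~

- Runs almost anywhere: on-premises, in public clouds, or a mix of both

- Open source

- Keeps services available by restarting or replacing failed containers

- Configurable replicas and load balancing

- Rolling updates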

Environment setup

- One or more Masters

- One or more Worker Nodes

- K8S installed with kubeadm

- Docker installed

An irresponsible K8S diagram

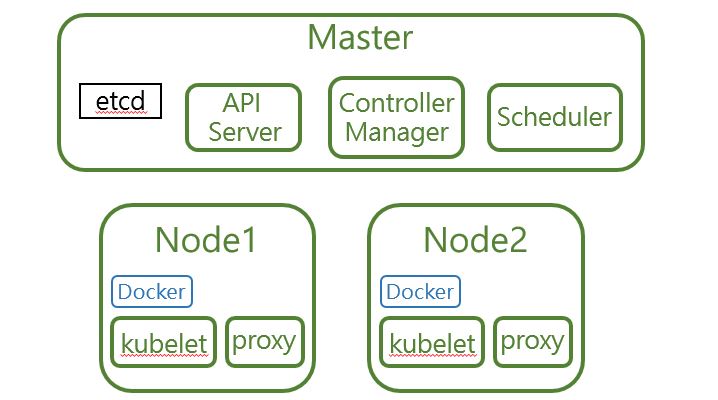

This is the most bare-bones K8S cluster: one Master and two Nodes.

Here is a rundown of the components inside a K8S cluster:

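- kubectl: the command-line tool you use to talk to the cluster. It sends your commands to the API Server.

- API Server: the front door of the control plane. Every read and write to etcd goes through it, and it handles authentication and authorization, e.g. who is allowed to put what into etcd?

- Controller Manager: runs the controllers that keep the cluster's actual state matching the desired state, e.g. making sure the requested number of Pod replicas are running.

- etcd: the brain of K8S. All cluster state is stored in etcd.

- kubelet: the agent on each node. It talks to the Master and tells Docker to run containers.

- Proxy (kube-proxy): forwards requests to the right container by maintaining network rules on each node.

- Scheduler: decides which node each Pod is placed on. If you specify placement constraints it follows them; if you don't, the Scheduler picks a node for you.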

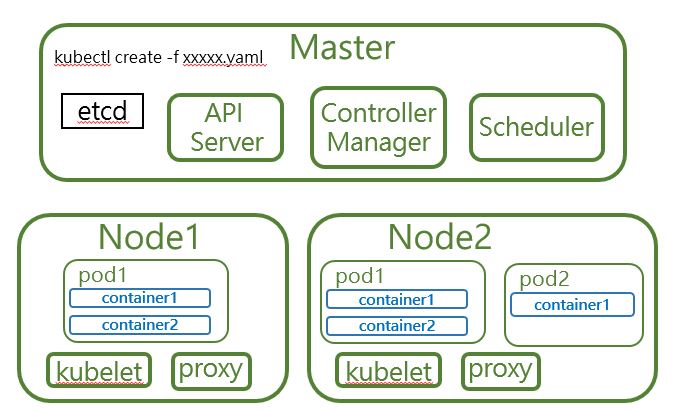

- Pod: the smallest deployable unit in K8S. A Node can host many Pods, and a Pod can hold one or more Containers.
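To make the Scheduler's job a bit more concrete, here is a toy two-phase model: first filter out nodes that can't run the Pod, then score the rest. This is a heavily simplified illustration, not the real kube-scheduler algorithm; the `Node` type, its `free_cpu_m` field and the "most free CPU wins" scoring are all assumptions made for the sketch.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    """Hypothetical, simplified view of a worker node."""
    name: str
    free_cpu_m: int  # free CPU in millicores (1000m = 1 core)
    labels: Dict[str, str] = field(default_factory=dict)


def schedule(pod_cpu_m: int, node_selector: Dict[str, str], nodes: List[Node]) -> Optional[str]:
    """Toy scheduling: filter feasible nodes, then pick the best-scoring one.

    Returns the chosen node name, or None if nothing fits
    (a real Pod in that situation would stay Pending).
    """
    # Filter phase: the node must carry every selector label and have enough CPU.
    feasible = [
        n for n in nodes
        if all(n.labels.get(k) == v for k, v in node_selector.items())
        and n.free_cpu_m >= pod_cpu_m
    ]
    if not feasible:
        return None
    # Score phase (simplified): prefer the node with the most free CPU.
    return max(feasible, key=lambda n: n.free_cpu_m).name
```

With `node1` (500m free, labeled `disk: ssd`) and `node2` (1500m free), a 200m Pod with no selector lands on `node2`, while the same Pod with `{"disk": "ssd"}` is forced onto `node1`: "if you specify constraints it follows them, otherwise it picks for you".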

When we run kubectl create -f xxxx.yaml on the Master node, K8S gets to work and the cluster ends up looking like this:

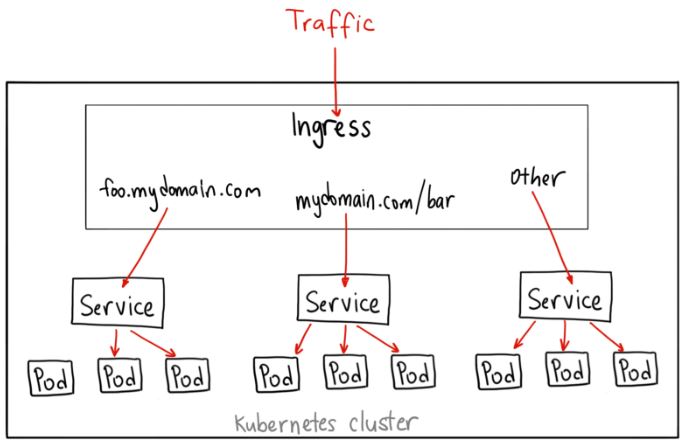

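K8S Ingress introduction

When you want to expose a service deployed on K8S to the outside world, you set the Service's type in its yaml file:

- ClusterIP: the default Service type. It gives your Service an IP that is reachable only from inside the K8S cluster.

- NodePort: gives your Service a port, by default in the 30000-32767 range, so the Service can be reached at NodeIP:port.

- LoadBalancer: gives your Service an external IP. This type generally only works on cloud providers that can provision a load balancer for you, e.g. AWS or GCP.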
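A small sketch of the NodePort rule: check that a port falls in the default range and build the NodeIP:port address a client would use. The range is only the default (a cluster admin can change it with kube-apiserver's `--service-node-port-range` flag), and the port 30080 in the usage line is a made-up example.

```python
# Default NodePort range (inclusive). Clusters can override it via
# kube-apiserver's --service-node-port-range flag.
NODE_PORT_MIN = 30000
NODE_PORT_MAX = 32767


def is_valid_node_port(port: int, lo: int = NODE_PORT_MIN, hi: int = NODE_PORT_MAX) -> bool:
    """Return True if port falls inside the NodePort range."""
    return lo <= port <= hi


def node_port_url(node_ip: str, node_port: int) -> str:
    """Address an external client uses to reach a NodePort Service.

    By default any node's IP works, since kube-proxy forwards the port on every node.
    """
    if not is_valid_node_port(node_port):
        raise ValueError(f"{node_port} is outside {NODE_PORT_MIN}-{NODE_PORT_MAX}")
    return f"http://{node_ip}:{node_port}/"
```

For example, `node_port_url("10.9.18.146", 30080)` gives `http://10.9.18.146:30080/`, which is exactly the "NodeIP:Port every time" pattern complained about below.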

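But LoadBalancer isn't available on local VMs. If you want to publish the Pod you just created so other people can reach it, is NodePort the only option? Then everyone has to connect via NodeIP:Port every time, which is inconvenient and exposes a lot of unnecessary details. So~

Image source: https://medium.com/google-cloud/ - Kubernetes NodePort vs LoadBalancer vs Ingress? When should I use what?

As the name suggests, an Ingress is an entry point. It sits in front of your Services and decides, based on rules such as the hostname and path, which Service each request should go to, acting as a smart router.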
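The "smart router" idea can be sketched as a lookup table keyed by host and path prefix, falling back to a default backend when nothing matches. This is a simplified model (path-segment prefix matching, longest prefix wins), not how the nginx ingress controller is implemented internally; the rule table mirrors the Ingress and default backend set up in the steps below.

```python
from typing import Dict, List, Tuple

# host -> list of (path prefix, "service:port"); names mirror this post's examples.
Rules = Dict[str, List[Tuple[str, str]]]

RULES: Rules = {"test.rita.com": [("/nginx_status", "nginx-ingress:18080")]}
DEFAULT_BACKEND = "default-http-backend:80"


def _matches(prefix: str, path: str) -> bool:
    """Prefix match on whole path segments: /foo matches /foo and /foo/bar, not /foobar."""
    p = prefix.rstrip("/")
    return p == "" or path == p or path.startswith(p + "/")


def route(rules: Rules, host: str, path: str, default: str = DEFAULT_BACKEND) -> str:
    """Pick a backend for (host, path); the longest matching prefix wins."""
    best_prefix, best_backend = "", default
    for prefix, backend in rules.get(host, []):
        if _matches(prefix, path) and len(prefix) > len(best_prefix):
            best_prefix, best_backend = prefix, backend
    return best_backend
```

So `route(RULES, "test.rita.com", "/nginx_status")` goes to `nginx-ingress:18080`, while `/`, an unknown host, or `/nginx_statusx` all fall through to the default backend.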

Let's set up our own Ingress!

step1. First, create a default-backend.yaml:


```yaml
# apps/v1 replaces extensions/v1beta1, which no longer serves Deployments since Kubernetes 1.16
apiVersion: apps/v1
kind: Deployment
metadata:
  name: default-http-backend
  labels:
    k8s-app: default-http-backend
  namespace: kube-system
spec:
  replicas: 1
  # apps/v1 requires an explicit selector matching the template labels
  selector:
    matchLabels:
      k8s-app: default-http-backend
  template:
    metadata:
      labels:
        k8s-app: default-http-backend
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: default-http-backend
        # Any image is permissible as long as:
        # 1. It serves a 404 page at /
        # 2. It serves 200 on a /healthz endpoint
        image: gcr.io/google_containers/defaultbackend:1.0
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 30
          timeoutSeconds: 5
        ports:
        - containerPort: 8080
        resources:
          limits:
            cpu: 10m
            memory: 20Mi
          requests:
            cpu: 10m
            memory: 20Mi
---
apiVersion: v1
kind: Service
metadata:
  name: default-http-backend
  namespace: kube-system
  labels:
    k8s-app: default-http-backend
spec:
  ports:
  - port: 80
    targetPort: 8080
  selector:
    k8s-app: default-http-backend
```

kubectl create -f default-backend.yaml
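The comment in the Deployment spells out the whole contract a default backend has to meet: 404 at / and 200 at /healthz. As a sketch of that contract (not the code inside the defaultbackend image), here is a tiny standard-library server, plus a `probe` helper that checks a URL roughly the way an httpGet probe does (any status from 200 to 399 counts as healthy). Port 8080 matches `containerPort` in the manifest; the response bodies are assumptions.

```python
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlsplit


class DefaultBackendHandler(BaseHTTPRequestHandler):
    """200 on /healthz, 404 everywhere else (including /)."""

    def do_GET(self) -> None:
        path = urlsplit(self.path).path  # ignore any query string
        if path == "/healthz":
            status, body = 200, b"ok"
        else:
            status, body = 404, b"not found"
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # keep the console quiet


def make_server(port: int = 8080) -> HTTPServer:
    """Bind on all interfaces; 8080 matches containerPort (0 = pick a free port)."""
    return HTTPServer(("", port), DefaultBackendHandler)


def start_in_background(server: HTTPServer) -> threading.Thread:
    """Serve requests on a daemon thread so the caller can keep going."""
    t = threading.Thread(target=server.serve_forever, daemon=True)
    t.start()
    return t


def probe(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code; urllib raises HTTPError for 4xx/5xx, so unwrap it."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code
```

Run `start_in_background(make_server())` and `probe("http://127.0.0.1:8080/healthz")` should return 200, while `/` returns 404. That is all the ingress controller needs from whatever image you pick.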

----------I'm a divider----------

step2. Set up the ServiceAccount for the ingress controller

Create an nginx-ingress-controller-role.yaml:


```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx
---
kind: ClusterRole
# rbac.authorization.k8s.io/v1 replaces v1beta1 (removed in Kubernetes 1.22)
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nginx-role
rules:
- apiGroups:
  - ""
  - "extensions"
  resources:
  - configmaps
  - secrets
  - endpoints
  - ingresses
  - nodes
  - pods
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - list
  - watch
  - get
  - update
- apiGroups:
  - "extensions"
  resources:
  - ingresses
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
- apiGroups:
  - "extensions"
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - get
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nginx-role
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-role
subjects:
- kind: ServiceAccount
  name: nginx
  namespace: kube-system
```

kubectl create -f nginx-ingress-controller-role.yaml -n kube-system
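RBAC is purely additive: a request is allowed if any single rule in a bound role matches its API group, resource and verb. The sketch below models only that matching for the ClusterRole above (no wildcards, `resourceNames` or non-resource URLs), which is handy for spotting a missing permission before the controller starts logging "forbidden" errors. The `Rule` type and `allowed` function are hypothetical helpers, not part of any Kubernetes library.

```python
from typing import List, NamedTuple, Tuple


class Rule(NamedTuple):
    api_groups: Tuple[str, ...]  # "" is the core API group
    resources: Tuple[str, ...]
    verbs: Tuple[str, ...]


# Transcribed from the nginx-role ClusterRole above.
NGINX_ROLE: List[Rule] = [
    Rule(("", "extensions"),
         ("configmaps", "secrets", "endpoints", "ingresses", "nodes", "pods"),
         ("list", "watch")),
    Rule(("",), ("services",), ("list", "watch", "get", "update")),
    Rule(("extensions",), ("ingresses",), ("get",)),
    Rule(("",), ("events",), ("create",)),
    Rule(("extensions",), ("ingresses/status",), ("update",)),
    Rule(("",), ("configmaps",), ("get",)),
]


def allowed(rules: List[Rule], api_group: str, resource: str, verb: str) -> bool:
    """Allow if any single rule matches the group, resource and verb together."""
    return any(
        api_group in r.api_groups and resource in r.resources and verb in r.verbs
        for r in rules
    )
```

On a real cluster, `kubectl auth can-i list pods --as=system:serviceaccount:kube-system:nginx` answers the same question authoritatively.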

----------I'm a divider----------

step3. Set up the ingress controller's ConfigMap

Create an nginx-ingress-controller-config-map.yaml:


```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-ingress-controller-conf
  labels:
    app: k8s-app
data:
  enable-vts-status: 'true'
```

kubectl create -f nginx-ingress-controller-config-map.yaml -n kube-system
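Why is `'true'` quoted? A ConfigMap's `data` field maps string keys to string values, so an unquoted YAML `true` (a boolean) would be rejected when the manifest is submitted. Below is a hypothetical pre-flight check that works on the already-parsed manifest (a plain dict, so no YAML library is needed) and lists any non-string values.

```python
from typing import Any, Dict, List


def non_string_data_keys(configmap: Dict[str, Any]) -> List[str]:
    """Return keys under .data whose values are not strings (these would be rejected)."""
    data = configmap.get("data") or {}
    return sorted(k for k, v in data.items() if not isinstance(v, str))


# The manifest from this step, as a parsed dict.
NGINX_CONF: Dict[str, Any] = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "nginx-ingress-controller-conf", "labels": {"app": "k8s-app"}},
    "data": {"enable-vts-status": "true"},
}
```

`non_string_data_keys(NGINX_CONF)` comes back empty; drop the quotes (so the value parses as `True`) and it reports `enable-vts-status`.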

----------I'm a divider----------

step4. Run the nginx ingress controller Deployment

Create an nginx-ingress-controller.yaml:


```yaml
# apps/v1 replaces extensions/v1beta1, which no longer serves Deployments since Kubernetes 1.16
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  labels:
    k8s-app: nginx-ingress-controller
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: nginx-ingress-controller
  template:
    metadata:
      labels:
        k8s-app: nginx-ingress-controller
    spec:
      # hostNetwork makes it possible to use ipv6 and to preserve the source IP correctly regardless of docker configuration
      # however, it is not a hard dependency of the nginx-ingress-controller itself and it may cause issues if port 10254 already is taken on the host
      # that said, since hostPort is broken on CNI (https://github.com/kubernetes/kubernetes/issues/31307) we have to use hostNetwork where CNI is used
      # like with kubeadm
      hostNetwork: true
      terminationGracePeriodSeconds: 60
      serviceAccountName: nginx
      containers:
      - image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.12.0
        name: nginx-ingress-controller
        readinessProbe:
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
        livenessProbe:
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          timeoutSeconds: 1
        ports:
        - containerPort: 80
          hostPort: 80
        - containerPort: 443
          hostPort: 443
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        args:
        - /nginx-ingress-controller
        - --default-backend-service=$(POD_NAMESPACE)/default-http-backend
        - --configmap=$(POD_NAMESPACE)/nginx-ingress-controller-conf
```

kubectl create -f nginx-ingress-controller.yaml -n kube-system
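The `$(POD_NAMESPACE)` in `args` is not shell syntax: Kubernetes itself expands `$(VAR_NAME)` references in a container's command and args using that container's `env`, so the controller receives `--default-backend-service=kube-system/default-http-backend`. A reference to an undefined variable is left as-is, and `$$` escapes a literal `$`. The function below is a simplified re-implementation of those rules for illustration, not the kubelet's actual code.

```python
from typing import Dict, List


def expand(s: str, env: Dict[str, str]) -> str:
    """Expand $(NAME) using env; undefined names stay literal, "$$" becomes "$"."""
    out: List[str] = []
    i = 0
    while i < len(s):
        if s[i] == "$" and i + 1 < len(s):
            nxt = s[i + 1]
            if nxt == "$":  # escaped dollar sign
                out.append("$")
                i += 2
                continue
            if nxt == "(":
                end = s.find(")", i + 2)
                if end != -1:
                    name = s[i + 2:end]
                    # Substitute if defined, otherwise keep the original "$(NAME)" text.
                    out.append(env[name] if name in env else s[i:end + 1])
                    i = end + 1
                    continue
        out.append(s[i])
        i += 1
    return "".join(out)
```

With `env = {"POD_NAMESPACE": "kube-system"}`, the second arg expands to `--default-backend-service=kube-system/default-http-backend`, which is why the default-backend Service from step1 has to live in the same namespace as the controller.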

----------I'm a divider----------

step5. Now we can set up the Ingress~

Create an nginx-ingress.yaml:


```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nginx-ingress
spec:
  rules:
  - host: test.rita.com # any hostname you like
    http:
      paths:
      - backend:
          serviceName: nginx-ingress
          servicePort: 18080
        path: /nginx_status
```

kubectl create -f nginx-ingress.yaml -n kube-system
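The `extensions/v1beta1` Ingress API used here matches the 0.12.0 controller from step4, but it was removed in Kubernetes 1.22. On newer clusters the same rule goes under `networking.k8s.io/v1`, where the backend becomes `service.name` / `service.port` and every path needs a `pathType`. Here is a sketch of that field mapping on a parsed manifest (a plain dict). Only `rules` are handled, and defaulting `pathType` to `ImplementationSpecific` is an assumption, chosen because the old API left path matching up to the controller.

```python
import copy
from typing import Any, Dict

# The Ingress from this step, as a parsed dict.
OLD_INGRESS: Dict[str, Any] = {
    "apiVersion": "extensions/v1beta1",
    "kind": "Ingress",
    "metadata": {"name": "nginx-ingress"},
    "spec": {"rules": [{
        "host": "test.rita.com",
        "http": {"paths": [{
            "backend": {"serviceName": "nginx-ingress", "servicePort": 18080},
            "path": "/nginx_status",
        }]},
    }]},
}


def to_networking_v1(ingress: Dict[str, Any], path_type: str = "ImplementationSpecific") -> Dict[str, Any]:
    """Return a networking.k8s.io/v1 copy of an extensions/v1beta1 Ingress (rules only)."""
    new = copy.deepcopy(ingress)  # leave the caller's dict untouched
    new["apiVersion"] = "networking.k8s.io/v1"
    for rule in new.get("spec", {}).get("rules", []):
        for p in rule.get("http", {}).get("paths", []):
            old = p.pop("backend")
            port = old["servicePort"]
            # Numeric ports become port.number; named ports become port.name.
            port_ref = {"number": port} if isinstance(port, int) else {"name": port}
            p["backend"] = {"service": {"name": old["serviceName"], "port": port_ref}}
            p.setdefault("pathType", path_type)  # required in v1
    return new
```

Note that switching the API version alone is not enough: the 0.12.0 controller only watches the old API group, so a newer controller would be needed as well.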

----------I'm a divider----------

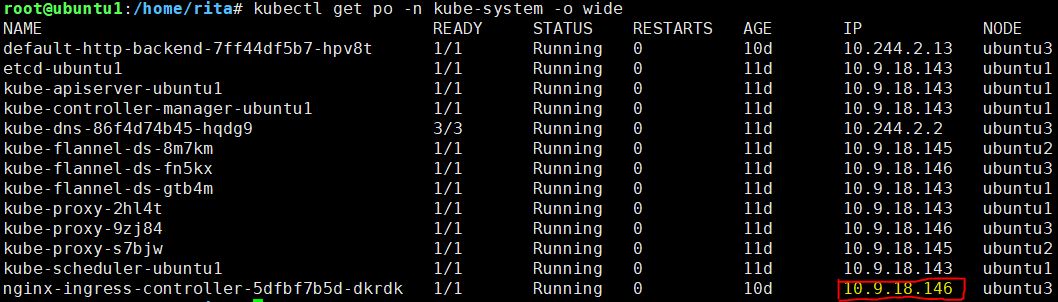

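step6. Run kubectl get po -o wide -n kube-system

to see which node your nginx-ingress-controller is running on. Because the controller runs with hostNetwork: true, it listens directly on ports 80 and 443 of that node, so add that node's IP and the Ingress hostname you configured earlier to your local hosts file (/etc/hosts on Linux and macOS, C:\Windows\System32\drivers\etc\hosts on Windows).

So the hosts entry looks like this ↓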

10.9.18.146 test.rita.com
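A hosts entry simply maps names to an IP before DNS is consulted: the IP comes first, then one or more hostnames, and `#` starts a comment. If you want to double-check what a hosts file says, here is a small parsing sketch. Keeping the first entry for a name mirrors what resolvers usually do, but treat that as an assumption for your OS.

```python
from typing import Dict, Optional


def parse_hosts(text: str) -> Dict[str, str]:
    """Map each hostname (lowercased) to its IP; the first entry for a name wins."""
    table: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            table.setdefault(name.lower(), ip)
    return table


def lookup(text: str, host: str) -> Optional[str]:
    """Return the IP the hosts text assigns to host, or None if it isn't listed."""
    return parse_hosts(text).get(host.lower())
```

Feeding it the line above, `lookup("10.9.18.146 test.rita.com", "test.rita.com")` returns `10.9.18.146`.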

----------I'm a divider----------

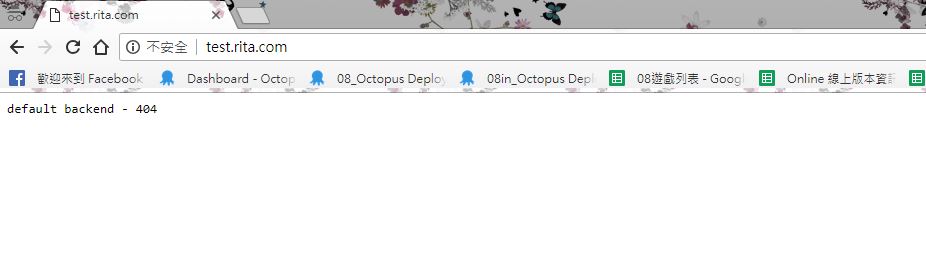

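Let's test whether we can reach the default backend~~ The Ingress only routes /nginx_status, so a request for any other path on test.rita.com falls through to the default backend, which answers with a 404.

It worked~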
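If you'd rather not edit the hosts file, the same check works by sending the Host header yourself, because the ingress controller chooses the rule from the Host header rather than from the IP you connected to (the curl equivalent is `curl -H "Host: test.rita.com" http://10.9.18.146/`). A standard-library sketch; the IP and hostname are this post's values.

```python
import urllib.error
import urllib.request


def build_request(node_ip: str, host: str, path: str = "/") -> urllib.request.Request:
    """Connect to the node's IP but present the Ingress hostname in the Host header."""
    req = urllib.request.Request(f"http://{node_ip}{path}")
    req.add_header("Host", host)  # urllib keeps a user-supplied Host header
    return req


def fetch_status(req: urllib.request.Request, timeout: float = 5.0) -> int:
    """Return the status code; 4xx/5xx arrive as HTTPError, which we unwrap."""
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code
```

Against the setup above, `fetch_status(build_request("10.9.18.146", "test.rita.com"))` should come back as 404, which means the request reached the default backend.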

Go try it yourself!!